Excuse Me. Do You Speak Human?

The way you interact with your computer, phone and tablet is all set to change... forever

If looks could kill? Mine do. They just slayed a melon, and cleaved a grapefruit clean in two. I’m playing Fruit Ninja. With my eyes.

Danish tech wizards at The Eye Tribe have developed eye-tracking software that uses the standard camera on your tablet, plus a sprinkling of infrared lights. The cost of adding this to a tablet design? Tiny. The result? Amazing.

You won’t see this technology in any phone or tablet. Yet. But it’s coming, along with a host of other changes to the interface between man and machine on show at this year’s Mobile World Congress. Computers, phones and tablets are starting to speak our language.

Talking Proper

Ten years ago, if you wanted to talk to a computer, you had to learn its language. Keyboards and mice are not natural ways for people to interact. We have to study how to use them, along with the conventions of the graphical user interface. If we really want to go native, we have to learn the syntax and vocabulary of programming languages.

These interfaces aren’t going away. They are powerful and, most importantly, high bandwidth: you can communicate a huge amount of information accurately and relatively quickly with a keyboard and mouse. But a new layer of natural interaction is appearing, and it means we will need to resort to these mechanical methods less and less.

Image credit: cype_applejuice

Move Your Body

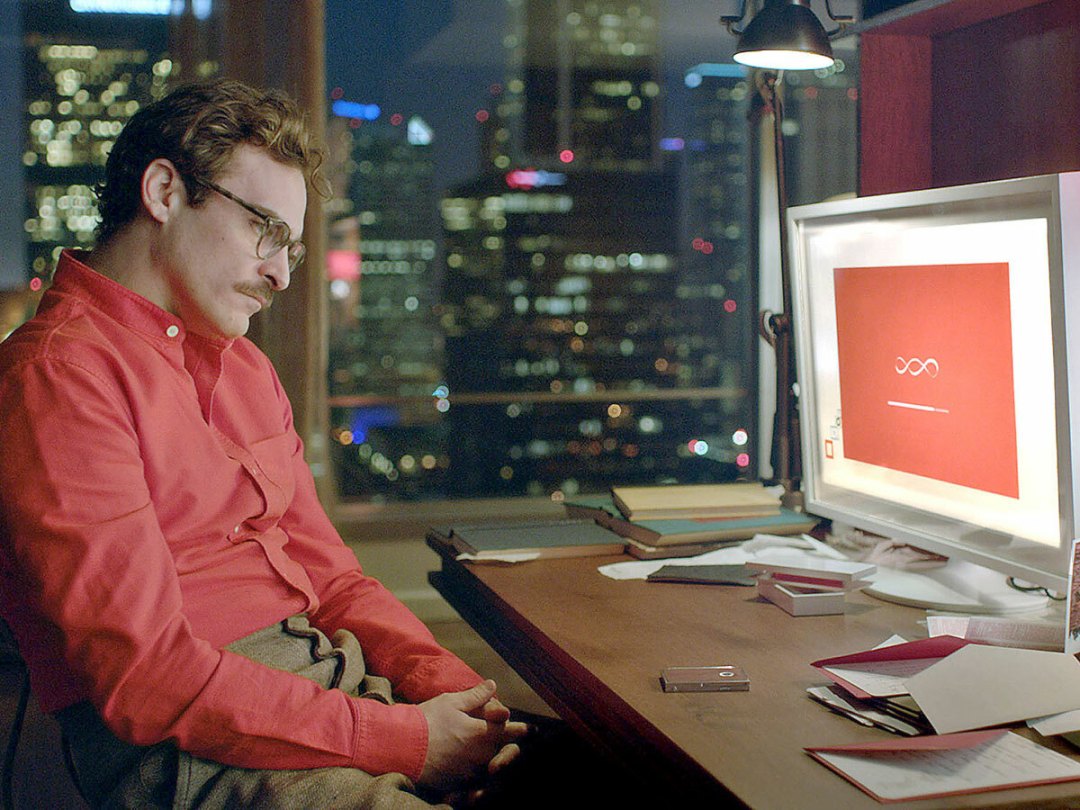

It started with touch, of course. Voice is becoming more and more practical, though we’re some way off the level of chat in the film Her (probably a good thing: we spend enough time with our computers without actually dating them). Biometrics went mainstream with the last generation of phones, with fingerprints and faceprints doing the unlocking. More is coming.

Machines are paying attention to our body language. Where we look. Or a wave of the hand. Elliptic Labs’ ultrasound-based gesture controls will appear in a phone from “a tier one manufacturer” later this year, according to its CEO, Laila Danielsen. A step up from the camera-based gesture controls we’ve had to date, the technology lets your phone stay aware of you at all times without running down the battery. Wake it up with a wave of your hand. Scroll through alerts without even touching the screen. And it works whatever the lighting conditions.

Put all these technologies together and the result is that we stop thinking about how we interact. We do what feels natural, based on the language we have learned to use with people, and the gestures we use to manipulate physical objects. The machines do the work to understand us.

Disappearing Gadgets

We’ve watched computers get smaller and smaller for the last 60 years, and that trend is continuing. Also demonstrated at Mobile World Congress were tiny GPS chips from Broadcom and a lens-less camera the size of a pinhead from Rambus. Computers and their components are now small enough to cram into clothes, jewellery, anything.

But the size of the components is not the only factor limiting how small devices can get. We have kept our gadgets packaged as phones and tablets partly because we have needed a single point on which to focus and tap. If anything, they have got bigger in recent years, with screen sizes growing to four, five or six inches. When we no longer need that focus, that place to put our fingers, the gadgets can go away. Disappear.

As gadgets increasingly speak our language we will see them melt away into the background, where they can do our bidding at the wave of a hand or a flick of the eyes.

Tom Cheesewright is an Applied Futurist. He will be speaking at Daisy Telecom’s Wired? 2014 event on 7th May.
